AI Is Not Coming for Your Job. It’s Coming for Something Else.

I think most of us are starting to feel it: that unease when another AI demo goes viral. When our Twitter and LinkedIn feeds fill with posts about ChatGPT writing code, generating designs, or answering customer questions. When you read another headline declaring that your profession has five years left, maybe ten if you are lucky. Every few weeks, another influential voice in the AI space declares an entire profession finished. And underneath all of it is a fear many people do not say out loud. A simple one. Am I still needed? You might not talk about it at work, but the thought sits there. What if they are right? What if I am next?

Let me slow you down for a moment. Not to reassure you. Not to tell you everything will be fine. But to ask you to think more clearly about what is actually happening. Because the question everyone keeps asking, the one that generates all this anxiety, is the wrong question. And wrong questions lead to panic instead of preparation.

The Question Everyone Is Asking Wrong

Here is what people keep asking: Will AI take my job? As if a job is a single, indivisible thing that either exists or does not. As if employment is a binary state that technology either preserves or destroys. But that is not how jobs work. That is not how they have ever worked.

People talk about jobs as if they are single objects that can be picked up and replaced. They are not. Jobs are bundles of tasks, responsibilities, judgment, and accountability. Some parts are visible and easy to describe. Others only show up when things go wrong. The mistake is focusing only on the visible tasks.

AI is very good at tasks. It is getting better fast. It can write, summarize, calculate, generate, and simulate. But humans are not paid simply because tasks exist. Humans are paid because someone needs to be responsible when outcomes matter. Responsibility is the part that does not show up in demos.

How the World Actually Works

Think about autopilot. Commercial airliners have had sophisticated autopilot systems for decades, and according to a Boeing publication from December 2025, the company is actively planning to enhance autopilot further with artificial intelligence. These systems can handle climb, cruising, navigation, and landing. They can fly more smoothly than most humans. They can adjust to weather conditions, maintain optimal altitude, and follow flight paths with precision that would exhaust a person. And yet, when you board a plane, there are still two pilots in the cockpit. Not one. Two. Both extensively trained. Both certified. Both required by law.

This is not tradition. This is not regulatory lag. This is intentional design based on how the world actually works. Systems fail. Weather changes in ways algorithms do not expect. Engines malfunction. Runways close. Passengers have medical emergencies. And when any of those things happen, someone has to make a call. Someone has to own the outcome. Someone has to explain to two hundred people why the plan changed, and someone has to live with the consequences if they are wrong.

Autopilot did not take the pilot’s job. It took some of the pilot’s tasks. The rest, the parts that involve judgment under uncertainty and accountability for lives, stayed exactly where they were. Human.

Look at self-driving cars. We have vehicles now that can navigate highways, change lanes, park themselves, and handle stop-and-go traffic. Impressive technology. Real capability. And yet, even in the most advanced systems, there is still a steering wheel. There is still a driver’s seat. There is still a human who can take over when something goes wrong. If the technology were truly autonomous, if it genuinely replaced the need for human judgment, there would be no wheel. No pedals. No override.

But the override exists because the engineers who built these systems understand something fundamental: technology handles the expected, humans handle the unexpected. And the unexpected is where accountability lives.

Consider air traffic control. Radar systems track planes automatically. Software calculates optimal flight paths and spacing. Computers flag potential conflicts long before humans would notice them. And yet, controllers still sit in those towers. They still make final calls. They still ground planes when weather looks wrong or reroute traffic when something feels off. The automation made them more effective. It did not make them obsolete.

Or think about radiology. AI can scan thousands of medical images and flag anomalies with startling accuracy. It can spot patterns human eyes miss. It can process a volume of scans that would take a radiologist weeks. Hospitals are deploying these tools right now. And radiologists are still there. Still reviewing. Still making final determinations. Still signing off on diagnoses. Because when the AI flags something ambiguous, when the scan does not quite fit the pattern, when a patient’s history complicates the picture, someone with years of training and a medical license has to decide what happens next. That someone gets sued if they are wrong. The AI does not.

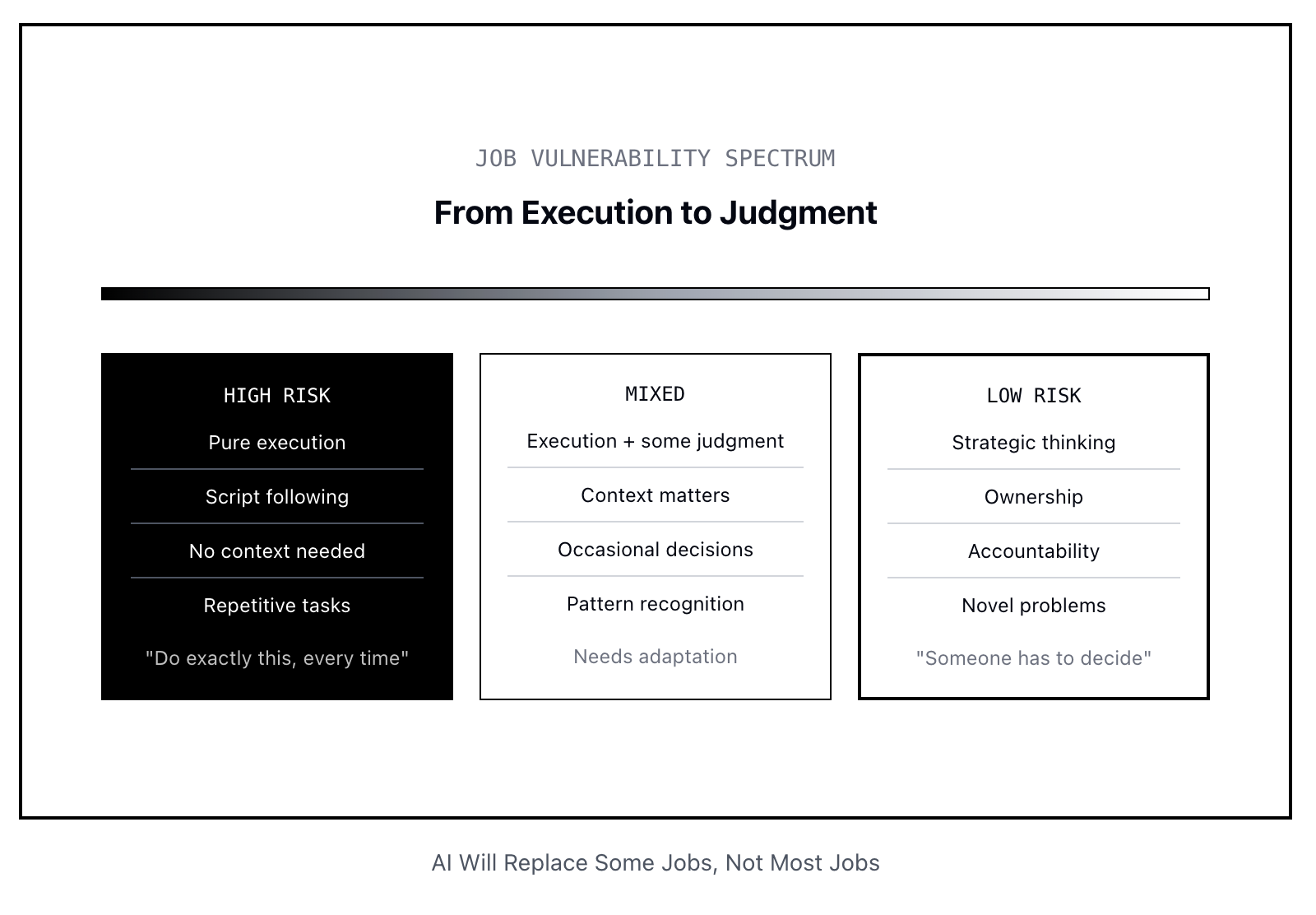

AI Will Replace Some Jobs, Not Most Jobs

Now, let me be clear about something. AI has replaced jobs. It will replace more. I am not going to pretend otherwise.

It has already eliminated roles that were entirely repetitive. Data entry positions that involved transcribing information from one system to another. Some customer service jobs that followed scripts without deviation. Assembly work that required no adaptation. These jobs existed in the gap between when manual labor became too expensive and when automation became cheap enough. That gap is closing.

It will continue replacing work that is purely executional. Tasks with no context. Roles where success means following instructions exactly as written. Jobs that never required a person to make a call, own an outcome, or exercise judgment. If your entire job can be described as “do exactly this, every time, with no exceptions,” then yes, that job is at risk. It always was.

But most jobs are not like that. In 2023, the U.S. Career Institute published a list of jobs that AI cannot replace. Most jobs, even ones that seem routine, involve more than execution. They involve knowing when to break the script. When to escalate. When to make an exception. When to say no. And those parts, the judgment parts, are what people actually get paid for.

What This Means for People Who Build Things

Let me talk about software engineering specifically, because this is where I see the most anxiety and the most confusion, especially in the posts flying around on X recently.

AI can generate code. It can write functions, implement algorithms, and even scaffold entire features. It is genuinely useful. I use it. Most engineers I know use it. It has made certain tasks dramatically faster. But here is what it has not done: it has not eliminated the need for engineers. Because writing code is not the job. Writing code is a task within the job.

The job is understanding what to build. The job is knowing why one architecture will scale and another will collapse under load. The job is carrying a pager and waking up at 3 a.m. when production breaks. My team at Swiftspeed woke me up on Christmas Eve to fix a bug that was preventing users from publishing their apps. Why did they not just ask ChatGPT? The job is making trade-offs between speed and correctness, between features and tech debt, between what the product team wants and what is actually possible. The job is looking at a system six months from now and knowing which decisions will haunt you.

AI does not do that. It cannot do that. It does not own anything. It does not carry responsibility. It does not get fired when the system goes down or promoted when the launch goes well. It generates code. You decide if that code ships.

The engineers most exposed right now are the ones whose entire role was executional. Junior developers who only ever implemented exactly what was specified. People who could code but never learned to think about systems. Roles that treated software as following instructions rather than making decisions. Those positions are getting compressed. Not because AI is better at engineering, but because those were not really engineering roles to begin with. They were coding roles. And coding, isolated from judgment, is a task.

The engineers who will thrive are the ones who understand that AI is a tool that makes them faster at the parts that do not matter and frees them to focus on the parts that do. Systems thinking. Architecture. Ownership. Responsibility. You cannot automate your way out of needing someone to make the hard calls.

The pattern holds across professions. Designers who only execute mockups are at risk. Designers who understand user psychology and make strategic decisions about experience are not. Writers who produce content to fill space are vulnerable. Writers who understand narrative, persuasion, and audience are amplified. Customer support agents who read from scripts will be replaced. Support professionals who solve novel problems and make customers feel heard will remain essential.

In every case, the work that survives is the work that involves judgment, context, and accountability. AI handles the routine. Humans handle the responsibility.

What You Should Actually Do About This

So what should you do with this information? Not panic. Panic is understandable, but it is not useful. It leads to frozen thinking. It makes you feel like a passenger in your own career. And you are not.

Here is a better frame: you are not competing against AI. That is the wrong contest. You are deciding whether to use AI as leverage or ignore it while others do. The people who will struggle are not the ones AI replaces. They are the ones who refuse to adapt while the work changes around them.

This does not mean you need to learn prompt engineering or take a course on machine learning. It means you need to move deliberately toward work that requires more judgment, more context, more ownership. It means looking at your role and asking which parts are purely executional and which parts involve making calls. Then making sure you are spending more time on the latter.

If you are a software engineer, stop just writing code. Start owning systems. Understand the business. Make architectural decisions. Carry production responsibility. If you are a designer, stop just making things pretty. Understand strategy. Learn to argue for user needs. Make trade-offs between ideal and achievable. If you are a writer, stop filling word counts. Understand persuasion. Build narrative. Develop a voice that matters.

The goal is not to beat AI. The goal is to do work that AI cannot do because it lacks skin in the game. Because it cannot be held accountable. Because it does not understand what is at stake.

AI is not coming for your job. It is coming for the tasks within your job that never required judgment in the first place. What it leaves behind is harder, more human, and more valuable. The question is whether you are ready to do that work, or whether you have been coasting on execution for so long that you have forgotten what judgment looks like.

The real risk is not the technology. The real risk is staying in place while the work moves. For me, everything else is just noise.